The views expressed in this post are my own and do not represent an official NASA position on the Google Glass technology.

I picked up my Glass on Thursday, and as I was taking the train back to my parents’ house in Boston I noticed the Moon out the window. Being a space geek, I was struck by the thought that 44 years ago the Apollo 11 crew was midway through their journey from the Earth to the Moon, relying on computers that crawled along at a snail’s pace compared to Google Glass (the Apollo Guidance Computer ran at 1.024 MHz).

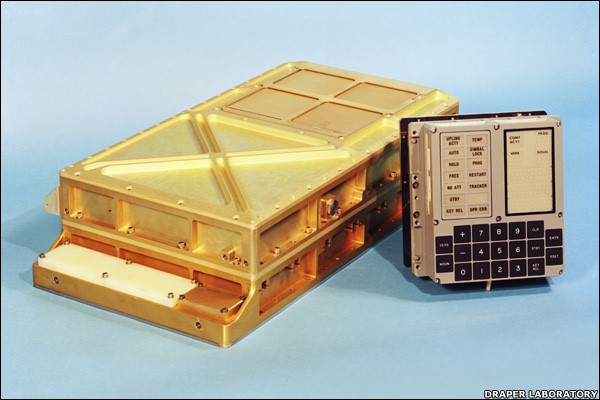

The final version of the AGC. On the left is the main computer, while on the right is the DSKY unit used for input and output of data.

The Apollo computers were primitive by today’s standards, and yet we got to the surface of the Moon and back safely six times. Now, having worn my Glass for the past 48 hours, I marvel at how far we have come. Gone are the days of inputting nouns and verbs on a keyboard; now we have voice, swipe, and tap.

“Houston, Tranquility Base here. The Eagle has landed.

…OK Glass, Google Apollo 11.”

In 44 short years, computers have gone from punch cards and room-sized machines to something that fits on my face. While computers have advanced dramatically, the US space program has unfortunately gotten stuck in the shallow waters of Low Earth Orbit. We flew a shuttle and built a space station, yet we no longer have the capability to send a crew to the Moon or beyond. We once inspired a generation with our Moonshot; now Moonshot thinking seems to be more in the court of Google and the commercial space companies.

Pondering the implications of Glass at my pickup

As I roamed NYC, Boston, and Houston, I ran into a few folks who knew what Glass was, and they were all amazed by the concept. In the short time I have had Glass, it has been pretty cool, though I think I have barely started to scratch the surface of what is possible for the public or for NASA. I know some people here at JSC have been working on augmented reality and how Glass-like devices could be used on the space station, but they are looking for something closer to Terminator vision than Glass as it is today.

Midday alert for an upcoming pass.

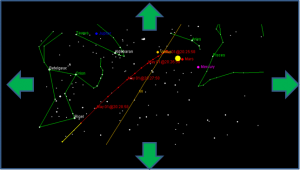

I’m not sure whether NASA will embrace Glass, but I can definitely see ways we could use it in Mission Control and aboard the ISS, as well as Glassware for the public. Right now folks can get text alerts about when the ISS will pass overhead, or they can look it up on the web, but what if a sky map could be sent right to Glass, using its sensors to help users orient themselves and spot the space station in the night sky?

ISS pass with compass and tilt sensors helping to orient the user’s point of view to spot the space station flying over.
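To make the sky-map idea a little more concrete, here is a rough sketch of the pointing logic such Glassware might use. It assumes the ISS position for the pass is already known as an azimuth and elevation (in practice that would come from an orbit prediction, which is not shown), and that the compass heading has already been corrected to true north. The names `Direction` and `pointing_hint` are hypothetical, not part of any Glass API.

```python
"""Hypothetical pointing guide for spotting an ISS pass.

Compares where the station is (target) with where the wearer is
looking (device heading from the compass, pitch from the tilt sensor)
and returns a short hint. All inputs are illustrative assumptions.
"""

from dataclasses import dataclass


@dataclass
class Direction:
    azimuth_deg: float    # compass bearing: 0 = north, 90 = east (assumed true north)
    elevation_deg: float  # angle above the horizon in degrees


def wrap_180(angle_deg: float) -> float:
    """Wrap an angle into [-180, 180) so we always take the shorter turn."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def pointing_hint(target: Direction, device: Direction, tolerance_deg: float = 5.0) -> str:
    # Positive turn means rotate right (clockwise seen from above).
    turn = wrap_180(target.azimuth_deg - device.azimuth_deg)
    # Positive tilt means look up.
    tilt = target.elevation_deg - device.elevation_deg

    if abs(turn) <= tolerance_deg and abs(tilt) <= tolerance_deg:
        return "Look here: ISS in view"

    parts = []
    if abs(turn) > tolerance_deg:
        parts.append(f"turn {'right' if turn > 0 else 'left'} {abs(turn):.0f}°")
    if abs(tilt) > tolerance_deg:
        parts.append(f"tilt {'up' if tilt > 0 else 'down'} {abs(tilt):.0f}°")
    return ", ".join(parts)


if __name__ == "__main__":
    # Station low in the north-northwest, wearer facing north-northeast and looking near the horizon.
    print(pointing_hint(Direction(350, 40), Direction(20, 10)))
```

Wrapping the heading difference into [-180, 180) is what keeps the hint from telling someone facing 20° to turn right 330° when a 30° turn to the left gets them there.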

Another outreach concept I have been thinking about is whether there is a way to bundle the ISS Live Mission Control page as cards for Glass. How interested would folks be in getting alerts on the space station’s status? They could pick which consoles they are interested in (Flight Director, ADCO, SPARTAN, etc.) and get nominal status cards, as well as alerts for off-nominal conditions and upcoming events such as dockings and spacewalks.

ISS Live Consoles you could choose to get updates from

As I walked around over the past two days, it was interesting to notice so many people with their heads down in their phones, or blocking their whole faces with giant iPads to take pictures. They seemed odd to me now that so much information comes right into my field of view, with an unobtrusive camera always at the ready. I am looking forward to seeing how folks at work react on Monday and what other ideas we come up with for potential internal as well as outreach use cases. In the end, Glass is still in the early stages for both hardware and software, but the potential to revolutionize our lives is there.

And maybe that’s just the moonshot we need at this point.